In his famous 1961 experiment on obedience to authority, Yale University psychologist Stanley Milgram set out to answer the question, “Could it be that Eichmann and his million accomplices in the Holocaust were just following orders? Could we call them all accomplices?”1

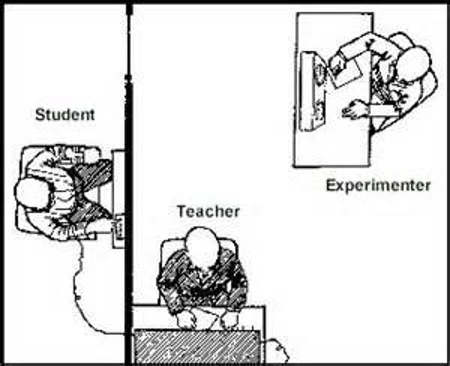

Each session of Milgram’s experiment involved three people: the experimenter (the authority); the subject of the experiment (a volunteer who was told that he or she was a “teacher”); and the confederate (a plant, who was thought by the subject to be a “student” or “learner,” but who was actually an actor).

First, the “teacher” (subject of the experiment) was given a sample 45-volt shock. This was done to give the teacher a feeling for the jolts that the “student” (actor) would supposedly be receiving in the early stages of the experiment.

The electroshock generator at the desk of the teacher had 30 switches labeled from 15 to 450 volts. These voltage levels were also labeled “slight shock,” “moderate shock,” “strong shock,” “very strong shock,” “intense shock,” and “extreme intensity shock.” The final, forbidding-sounding labels read “Danger: Severe Shock” and “XXX.”

In a separate room, the learner was strapped to a chair with electrodes. Then the teacher read a list of word pairs to the student, and the student pressed a button to give his answer. If the student’s response was correct, the teacher would go to the next list of word pairs, but if the answer was wrong, the teacher would administer an electric shock to the student.

This pattern continued, with shocks increasing in 15-volt increments for each succeeding incorrect answer. In reality, no electric shocks were administered; instead, pre-recorded sounds of a person in pain were played at certain shock levels. At a higher level of the supposed shocks, the actor banged on the wall separating him from the teacher and complained of his heart condition. At an even higher shock level, all sounds from the student ceased.

Whenever a teacher became concerned and indicated he wanted to stop the experiment, the authority figure issued a pre-determined set of verbal prods, given in this order:

- Please continue.

- The experiment requires that you continue.

- It is absolutely essential that you continue.

- You have no other choice. You must go on.

If, after the fourth prod, the teacher still indicated a desire to stop, the experiment was halted. Otherwise, it was terminated only after the teacher delivered what he or she thought was the maximum 450-volt shock three times in succession to the same student.

Before conducting these experiments, Milgram polled fourteen Yale University senior-year psychology majors, all of whom believed that only a very small fraction of teachers would inflict the maximum voltage. He then informally polled his colleagues, who likewise believed only a small fraction would progress beyond giving a very strong shock. Additionally, forty psychiatrists from a medical school predicted that only one-tenth of one percent of the teachers would progress to the maximum shock level.

So they were surprised, as was Milgram himself, when they learned that approximately two-thirds of his subjects willingly, if reluctantly, administered what they thought was the maximum — potentially lethal — 450-volt shock to a student.

In his article, “The Perils of Obedience,” Milgram summarized the results of his groundbreaking study:

Stark authority was pitted against the subjects’ strongest moral imperatives against hurting others, and, with the subjects’ ears ringing with the screams of the victims, authority won more often than not. The extreme willingness of adults to go to almost any lengths on the command of an authority constitutes the chief finding of the study and the fact most urgently demanding explanation.2

That’s not good news to those of us confronting the lies and abuses of the authority figures in our lives. But a later, modified version of this experiment delivered some hope and insight. Milgram explains:

In one variation, three teachers (two actors and a real subject) administered a test and shocks. When the two actors disobeyed the experimenter and refused to go beyond a certain shock level, thirty-six of forty subjects joined their disobedient peers and refused as well.3

This modified experiment’s lesson for 9/11 skeptics is not difficult to grasp: If we continue pushing through the barriers of our own internal taboos and through the resistance of others, speaking confidently of the truth about 9/11, sticking to solid facts while avoiding speculation, others throughout the world will eventually join us in rejecting the official account of the horrific events of 9/11 — and the even more horrific aftermath of the so-called Global War on Terror.4

Another variation on the original experiment is particularly relevant to the challenges we face as we try to raise awareness of the atrocities for which our government is responsible and for which we, in the last analysis, are therefore also responsible. Milgram’s description and evaluation are potently clear:

I will cite one final variation of the experiment that depicts a dilemma that is more common in everyday life. The subject was not ordered to pull the lever that shocked the victim, but merely to perform a subsidiary task (administering the word-pair test) while another person administered the shock. In this situation, thirty-seven of forty adults continued to the highest level of the shock generator. Predictably, they excused their behavior by saying that the responsibility belonged to the man who actually pulled the switch. This may illustrate a dangerously typical arrangement in a complex society: it is easy to ignore responsibility when one is only an intermediate link in a chain of actions. (Emphasis added.)

The problem of obedience is not wholly psychological. The form and shape of society and the way it is developing have much to do with it. There was a time, perhaps, when people were able to give a fully human response to any situation because they were fully absorbed in it as human beings. But as soon as there was a division of labor things changed. Beyond a certain point, the breaking up of society into people carrying out narrow and very special jobs takes away from the human quality of work and life. A person does not get to see the whole situation but only a small part of it, and is thus unable to act without some kind of overall direction. He yields to authority but in doing so is alienated from his own actions.

Even Eichmann was sickened when he toured the concentration camps, but he had only to sit at a desk and shuffle papers. At the same time the man in the camp who actually dropped Cyclon-b into the gas chambers was able to justify his behavior on the ground that he was only following orders from above. Thus there is a fragmentation of the total human act; no one is confronted with the consequences of his decision to carry out the evil act. The person who assumes responsibility has evaporated. Perhaps this is the most common characteristic of socially organized evil in modern society.5

The results of Milgram’s original study, and of subsequent studies like the one described directly above, can still “shock” us a half-century later, as they did the world in the 1960s. For me, an undergraduate student at the time of the 1961 experiment, learning that two-thirds of average people like me would deliver a potentially lethal shock to a helpless and ill person was disturbing and life-changing. I had been reared by fairly authoritarian parents, so I knew it was likely that I, too, would have followed those orders! From then on, I resolved never to follow authority blindly, but instead to listen to and trust my own inner guide and my conscience.

But do the findings from these studies apply not just to following orders, but also to firmly believing what an authority tells us? Or do we follow orders from a respected authority without necessarily deeply believing what this authority proclaims (e.g., that 19 Muslims attacked our country because they hate our freedoms)? Studies subsequent to Milgram’s suggest that we humans have a strong tendency to believe, as well as follow, an authority, especially if our fear is intensified and we already respect that authority. Such was the case when, just after the 9/11 attacks, Americans by and large trusted whatever the leader of their country, President Bush, told them.

An astonishing social experiment by third-grade teacher Jane Elliott demonstrates our human proclivity to believe a trusted authority, and even to develop our identity based on what this authority tells us about ourselves. Following the assassination of Martin Luther King, Jr., Elliott wanted to help her all-white third-graders in a small town in Iowa to understand prejudice. One day she told them:

Today, the blue-eyed people will be on the bottom and the brown-eyed people on the top. What I mean is that brown-eyed people are better than blue-eyed people. They are cleaner than blue-eyed people. They are more civilized than blue-eyed people. And they are smarter than blue-eyed people.6

Brown-eyed children were allowed longer recess time and the use of the bigger playground equipment. They were permitted to be first in line for lunch and second helpings. Elliott instructed the blue-eyed people to not play with brown-eyed people unless asked and to sit in the back of the room. Each brown-eyed child was given a collar to put around the neck of a blue-eyed child. Throughout the day, the teacher reinforced that brown-eyed children were superior and blue-eyed children were inferior.

By lunchtime, the behavior of the children revealed whether they had brown or blue eyes:

The brown-eyed children were happy, alert, having the time of their lives. And they were doing far better work than they had ever done before. The blue-eyed children were miserable. Their posture, their expressions, their entire attitudes were those of defeat. Their classroom work regressed sharply from that of the day before. Inside of an hour or so, they looked and acted as if they were, in fact, inferior. It was shocking.7

But even more frightening was the way the brown-eyed children turned on their friends of the day before.8

The next day Jane Elliott reversed the experiment, labeling the blue-eyed children as superior; she saw the same results, but in reverse. In conclusion:

At the end of the day, she told her students that this was only an experiment and there was no innate difference between blue-eyed and brown-eyed people. The children took off their collars and hugged one another, looking immensely relieved to be equals and friends again. An interesting aspect of the experiment is how it affected learning. Once the children realized that their power to learn depended on their belief in themselves, they held on to believing they were smart and didn’t let go of it again.9

But surely, adults would be able to discern and resist this kind of social pressure and would be immune to it, right? Surely adults would not allow their very identity to be affected by such manipulation, would they?

We shall find out. In a study strikingly similar to third-grade teacher Jane Elliott’s, social psychologist Philip Zimbardo conducted his famous Stanford Prison Experiment in the early 1970s. It proves that the assumption we make about adults’ immunity to social pressure, though understandable, is for the most part wrong.

Zimbardo and his colleagues used 24 male college students as subjects, dividing them arbitrarily into “guards” and “inmates” within a mock prison. He instructed the “guards” to act in an oppressive way toward the “prisoners,” thereby assuming the role of authority figures. Zimbardo himself became an authority figure to all of the student subjects, since he both authored the experiment and played the role of prison superintendent.10

All students knew this was an experiment, but, to the surprise of everyone — even the experimenters! — the students rapidly internalized their roles as either brutal, sadistic guards or emotionally broken prisoners. Astonishingly, the “prison system” and the subsequent dynamic that developed had such a deleterious effect on the subjects that the study, which was to last a fortnight, was terminated on the sixth day. The only reason it was called to an early halt is that graduate psychology student Christina Maslach — whom Philip Zimbardo was dating and who subsequently became his wife — brought to his attention the unethical conditions of the experiment.11

As with the Milgram and Elliott studies, the Zimbardo experiment demonstrates the human tendency not only to follow authority but also to believe what that authority tells us. This conclusion is all the more astounding given that the parties to his study knew in advance that it was simply an experiment.12 The Zimbardo and Elliott studies also demonstrate that our very identities are affected by what a person in authority proclaims about us, and that peer pressure powerfully reinforces this tendency. It’s no wonder, then, that Milgram’s adult subjects, Elliott’s third-graders, and Zimbardo’s college-age students committed atrocities, even in violation of their own cherished moral values.

Zimbardo was called as an expert defense witness at the court-martial of night-shift prison guard Ivan “Chip” Frederick, who was one of the infamous “Abu Ghraib Seven.” Based on his experience with the Stanford Prison Experiment, Zimbardo argued in this court case that it was the situation that had brought out the aberrant behavior in an otherwise decent person. While the military brass maintained that Frederick and his fellow guards were a few “bad apples” in an otherwise good U.S. Army barrel, Zimbardo contended they were normal, good soldiers in a very, very bad barrel.

Chip Frederick pleaded guilty and received a sentence of eight years in prison; Zimbardo’s testimony had little effect on the length of the term. The other guards, also found guilty, received sentences ranging from zero to ten years.

What is the truth about these night-shift guards? Were they a few “bad apples” in a good barrel, or was the barrel itself contaminated? The U.S. Army itself has confirmed that there have been more than 600 accusations of abuse of detainees since October 2001. Many more cases went unreported, including abuse of “ghost detainees,” those unfortunate souls who, under the control of the CIA, were never identified and were often “rendered” to torture states. Many of these victims were essentially “disappeared.” We can conclude that there had to have been many “ghost abusers” who were never held accountable.

To support his accusation that the barrel, rather than the apples, was toxic, Zimbardo wrote a book, The Lucifer Effect, which puts the system itself on trial. In it, he makes the case that the orders, the expectations, and the pressure to torture came from the very top of the chain of command. Zimbardo’s detailed analysis finds Secretary of Defense Donald Rumsfeld, Central Intelligence Agency Director George Tenet, Lt. Gen. Ricardo Sanchez, Maj. Gen. Geoffrey Miller, Vice President Dick Cheney, and President George W. Bush all guilty.

His conclusion: “This barrel of apples began rotting from the top down.” At the same time, The Lucifer Effect’s author also praises the many heroes — the whistle-blowers from the bottom to the top of the military hierarchy, who risked their lives and careers to stand up to, and to stand strong against, the toxic system.13

Why do some people conform to the expectations of the system while others muster the courage to remain true to their principles? Throughout the sections of this essay, there are pointers that answer this question from the perspective of developmental and depth psychology. But to address such an immensely important subject in detail would require a separate work. In the meantime, Zimbardo has begun the exploration from a social psychologist’s viewpoint by declaring that we are all “heroes in waiting” and by offering suggestions on how to resist undesirable social influences.14

It is my firm belief that skeptics of any paradigm-shifting, taboo subject who publicly expose lies and naked emperors are heroes who have come out of hiding. I believe this to be also true of 9/11 skeptics. All such skeptics have suffered the ridicule and wrath of those emperors, their minions, and the just plain frightened.

These three studies — Milgram’s study on obedience to authority, Elliott’s “Blue Eyes/Brown Eyes Exercise,” and Zimbardo’s Stanford Prison Experiment — demonstrate our human proclivity to trust and obey authority.

Now comes another question: Is this predisposition of humans to depend upon their leaders a trait that is encoded genetically? Evidence appears to support a “yes” answer.

To survive as babies and young children, we automatically look to our parents for confirmation of safety or danger.

Chimpanzees, with whom our genetics match at least 94%,15 generally have one or more alpha male leaders in a troop. Often these leaders are chosen by the females.16 Bonobos, with a genome close to that of the chimpanzees and thus to humans, have a matriarchal system with a female leader.17 And, of course, human communities have leaders. Thus, the need for a leader, for an authority, appears to be genetically hardwired.

If we have been reared in an authoritarian family and school system, this tendency to rely on authority figures for confirmation of reality is likely reinforced. Conversely, if we are reared in a family, a school system, and a cultural context that rewards critical thinking and respects our individual feelings and needs, the tendency to rely on authority figures is likely weakened.

In American society, many of our officials routinely lie to us and abuse us. Even though the lies and abuse have been well documented, many citizens continue to look to these officials for truth and for security — especially when a frightening incident has taken place that heightens their anxiety and insecurity. This strong tendency to believe and obey authority, then, is yet another obstacle with which skeptics of the official 9/11 account must contend.

By unquestioningly believing and obeying authority, we develop and perpetuate faulty identities and faulty beliefs. As a result, we make poor decisions — decisions that often hurt ourselves and others. Perpetuating faulty identities and faulty beliefs and making bad decisions can also stem from other human tendencies, which we will examine in our next four sections: Doublethink, Denial and Cognitive Dissonance, Conformity, and Groupthink.

Copyright © Frances T. Shure, 2014

Endnotes

- Stanley Milgram, Obedience to Authority: An Experimental View (Harper & Row Publishers, Inc., 1974).

- “The Perils of Obedience – Stanley Milgram,” “Harpers Magazine article of 1974,” age-of-the-sage.org, http://www.age-of-the-sage.org/psychology/milgram_perils_authority_1974.html.

- Ibid.

- “Body Count: Casualty Figures after 10 Years of the ‘War on Terror’: Iraq, Afghanistan, Pakistan,” Physicians for Social Responsibility, https://calculators.io/body-count-war-on-terror.

- Milgram, “The Perils of Obedience.”

- Dennis Linn, Sheila Fabricant Linn, and Matthew Linn, Healing the Future: Personal Recovery from Societal Wounding (Paulist Press, 2012), 56–60.

- Ibid.

- Ibid.

- Dennis, Sheila, and Matthew Linn, Healing the Future, 57–58.

William Peters, A Class Divided: Then and Now, expanded ed. (New Haven: Yale University Press, 1987). This book includes an account of Jane Elliott conducting a similar experiment for adult employees of the Iowa Department of Corrections.

Documentary films that also tell this story are “The Eye of the Storm,” ABC News, 1970, distributed in DVD format by Admire Productions, 2004, and “A Class Divided,” Yale University Films, 1986, presented on Frontline and distributed in DVD format by PBS Home Video. Both programs include study guides for use with groups.

- “The psychology of evil: Philip Zimbardo,” September 23, 2008, TED, YouTube video, 23:10, https://www.youtube.com/watch?v=OsFEV35tWsg. Zimbardo had a dual relationship as director of the experiment and as a participant and admits becoming blinded to this and not seeing clearly his emotional involvement during the experiment. This is a powerful TED Talk about how average people will do evil in certain situations.

- Philip Zimbardo, The Lucifer Effect: Understanding How Good People Turn Evil (Random House Trade Paperbacks, 2008).

- “The Stanford Prison Experiment 50 Years Later: A Conversation with Philip Zimbardo,” April 6, 2021, Stanford Historical Society, YouTube video, 1:23:23, https://www.youtube.com/watch?v=3r-wZa3a6DY. Christina Maslach, Zimbardo’s wife, joins this fascinating interview.

- Zimbardo, The Lucifer Effect, 324–443.

- Ibid., 444–488.

- JR Minkel, “Human-Chimp Gene Gap Widens from Tally of Duplicate Genes,” Scientific American, December 20, 2006, http://www.scientificamerican.com/article.cfm?id=human-chimp-gene-gap-wide.

- “Chimpanzee,” Wikipedia, http://en.wikipedia.org/wiki/Chimpanzee.

- “Bonobo,” Wikipedia, http://en.wikipedia.org/wiki/Bonobo.

Note: Electronic sources in the endnotes have been archived. If they can no longer be found by a search on the Internet, readers desiring a copy may contact Frances Shure using this email address: franshure [at] estreet.com

You must replace the “[at]” in the email address above with the “@” symbol and remove the spaces for it to be a valid email address. The address above is listed as such to prevent spam from being sent to this email address.